You know those “year in review” things that apps like Spotify provide their users? They reveal your usage: how you engaged, things you did or said, things you liked, etc.

Usually this is presented to you as a bunch of numbers designed to make you feel good about your engagement and elicit, you guessed it, more engagement.

But with AI, this has landed differently for me. I don’t have social media, so the only “year in review” I get is from Spotify – which, honestly, I don’t mind. It’s fun seeing how much I listen to Ken Yates (check him out).

ChatGPT and Lovable have both provided me with my 2025 breakdown, and I think it’s time to call it quits. I’ll explain in a second, but first…

The Numbers

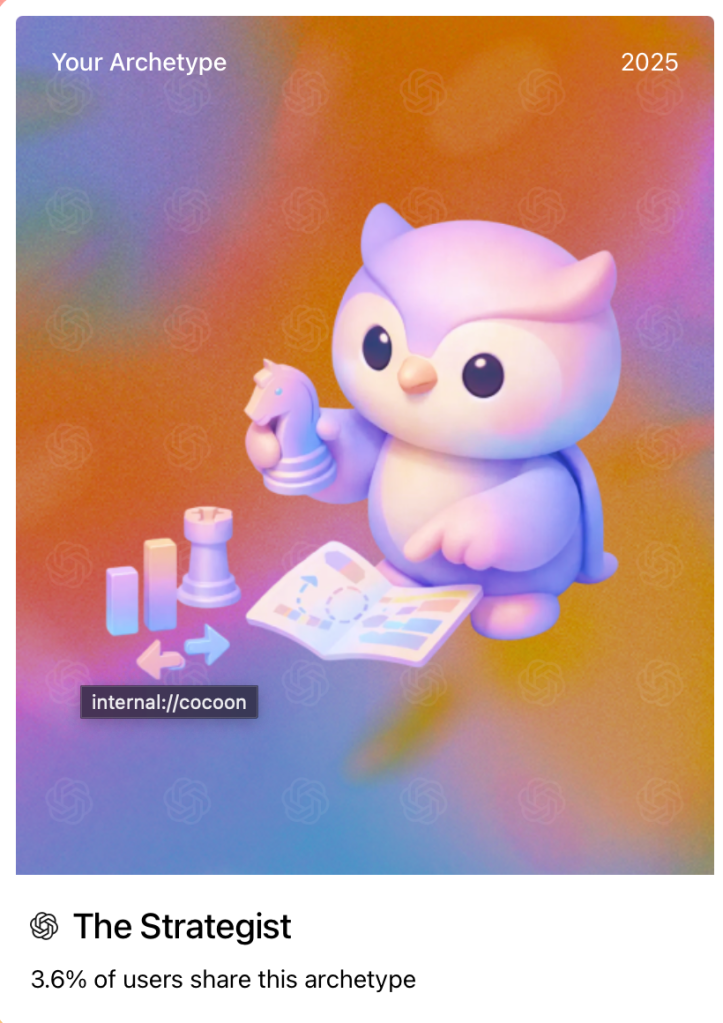

ChatGPT

____________________________________

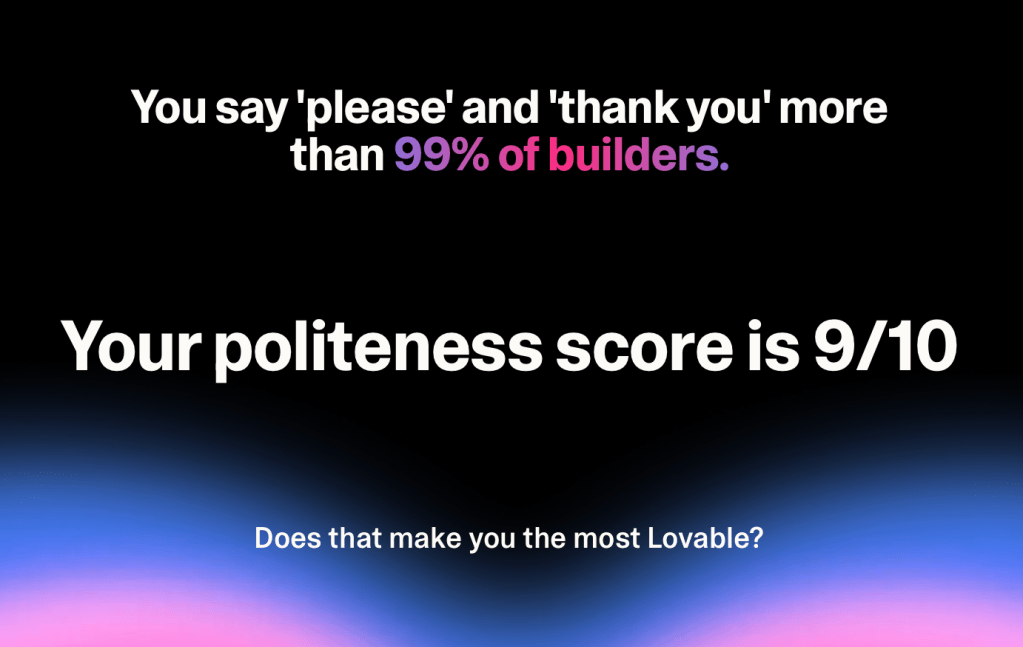

Lovable.dev

This last statistic is hilarious.

But the others suggest:

- I am VERY familiar with these systems and how they operate. You can rest assured that when I post theory, insights, or safety and alignment discussions, or make predictions about where this is all going, it is coming from an informed perspective and not just some pie-in-the-sky stuff. I got my hands dirty.

- I should probably take a fucking break.

Let me put this into perspective for you.

ChatGPT has over 900 million weekly active users worldwide as of December 2025, though that figure fluctuates; it was reported at 800 million to 1 billion in March 2025. The “first 0.1% of users” metric refers to adoption timing rather than current user base, meaning I was among the earliest adopters when ChatGPT launched in late 2022.

So for context: being in the top 1% of messages sent means I’m out-messaging roughly 890+ million other weekly users. As for the 0.1% early adopter designation: ChatGPT famously hit 100 million users in its first two months, so if the 0.1% is measured against that figure, I was among the first ~100,000 users, using it within days of launch, before it became a mass phenomenon.

62,430 messages across 1,523 conversations. That is genuinely nuts. The average session length for most users is about 14-15 minutes, and most users treat it casually. My usage, averaging about 41 messages per conversation, represents a qualitatively different level of engagement with the tool than that of the median user, who might ask a few questions and leave.
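If you want to check my math, here is a quick back-of-the-envelope sketch. It uses only the figures quoted above, and it rests on two assumptions of mine: that the top 1% is measured against the 900 million weekly users, and that the “first 0.1%” is measured against the 100 million users ChatGPT reached in its first two months. The variable names are just for illustration.

```python
# Back-of-the-envelope check of the figures quoted in this post.
# Assumption: "top 1%" is relative to weekly active users, and
# "first 0.1%" is relative to the first-two-months user count.
WEEKLY_USERS = 900_000_000            # weekly active users, as cited above
FIRST_TWO_MONTHS_USERS = 100_000_000  # users in the first two months, as cited above
MESSAGES = 62_430
CONVERSATIONS = 1_523

top_1_percent = WEEKLY_USERS // 100                 # size of the top 1% group
users_out_messaged = WEEKLY_USERS - top_1_percent   # everyone below the top 1%
early_adopters = FIRST_TWO_MONTHS_USERS // 1_000    # 0.1% of the early user base
avg_messages = MESSAGES / CONVERSATIONS             # messages per conversation

print(f"Top 1% group size:      {top_1_percent:,}")
print(f"Users out-messaged:     {users_out_messaged:,}")
print(f"First 0.1% of adopters: {early_adopters:,}")
print(f"Avg messages per chat:  {avg_messages:.1f}")
```

Under those assumptions, the top 1% is about 9 million accounts, leaving 891 million below it; the first 0.1% is 100,000 people; and the average works out to just under 41 messages per conversation.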

But here’s the thing. This level of engagement should not be sustained. Not because it could lead to burnout or deskilling, though that’s certainly a consideration. It’s not a complete disillusionment with the technology either, nor is it born of my deep suspicion of the entire AI enterprise and those running it, though I have many (and I mean MANY) thoughts about these things too.

The truth is, it’s about the risk. It is a decision motivated by my assessment that the rapidly increasing likelihood of a critical cyber-attack, the likes of which we are only beginning to see hints of (e.g. the cyber-espionage campaign that Anthropic recently reported on), requires immediate pre-emptive action.

These numbers reflect engagement, yes, but also exposure. 62,430 messages is 62,430 data points about how I think, what I’m working on, what I struggle with, what I care about, and how I reason through problems. It’s a comprehensive map of my intellectual and emotional interior that reveals how I relate to and navigate the world, timestamped and stored on servers I don’t control.

Now consider the target these platforms represent. ChatGPT’s 900 million weekly users are a goldmine of sensitive data: private information, health information, corporate secrets, confidential documents; the list goes on. The conversations stored across OpenAI, Anthropic, and every other AI company constitute perhaps the richest corpus of human thought, and thus vulnerability, ever amassed. People tell these systems things they don’t tell their therapists. They draft sensitive documents, process difficult emotions, explore ideas they’d never voice publicly.

When (not if) a major data breach occurs, the fallout won’t just be leaked password databases or stolen credit card numbers. It will be a cumulative and total picture of who you are as a human being, as far as everything you ever input is concerned. If you represent a disproportionate share of that data, congrats, you’re a premium target. Why? Because all of those data points can be leveraged against you. There’s likely enough data to reconstruct a near-complete intellectual and emotional profile of someone; I’m in the top 1% of engagement, which means I’m in the top 1% of vulnerability. What happens if that information is paired with my likeness, with my voice? It’s chilling to think about.

So with all that in mind, I want a clean slate. I’m declaring an Exodus. An Erasure. A full-scale Annihilation. Not from AI itself; these tools remain genuinely useful, and my work here at AI Reflects is meaningful to me (even if no one reads it). What I’m leaving behind is the accumulated mass of my digital thought-residue sitting on corporate servers waiting for the inevitable, and it bothers me more the more I think about it. I’m exporting everything worth keeping, and then I’m burning the bridge and everything on it to the fucking ground.

I’m starting by scrubbing myself from the digital ecosystem to the extent that is possible. Thus far, I’ve gone through my Claude account and reduced the number of conversations from 250+ to fewer than 80. My Claude is hooked up to Notion and will begin the process of backing everything up there: all conversations, instructions, preferences, writing styles, artifacts, documents, and code. Once that’s complete, the remaining conversations will be deleted.

ChatGPT will be more difficult. I have 80+ CustomGPTs, many projects, and many, many more chats. I cannot possibly go through all of them, but I have a rough idea of the things I want to save.

Side note: these frontier model providers need to create a housekeeping agent that does this for you. For real.

Once I’m done with this, I’m moving on to my Google Drive. I’ll be backing the entire thing up externally, and then deleting everything. Next I’ll be decoupling all the connections and permissions I’ve had in place for years, and emptying my entire Gmail account, save for a few key emails. All the Lovable projects I’ve been working on will be published privately to GitHub, where I’ll be able to pick things back up at a later date.

Aside from the risk, there’s something else that no one tells you when you start using these applications, and that’s how much of an unruly and absolute fucking shit-show your accounts will be if you don’t engage in data/file/conversation management from the get-go. For me, it’s too late now to go back and organize all that. So I’m saying fuck it and starting over.

Just remember: when the reckoning comes (and make no mistake, it will come), you will be sorry if you’ve had the benefit of reading this warning but did nothing to protect yourself.

Call it paranoia if you like.

I call it pattern recognition.

___________________________________________________________________

One response to “Digital Exodus: Time to Call it Quits?”

Everyone I follow on Twitter and a lot of the people I saw post it on Reddit were within the 1% lol. I guess I also need to get a breather from this bubble. Also, casual users wouldn’t bother to post it as much. I have migrated to SillyTavern for the majority of my AI interactions, also to have more control over my data.

These below are interesting stats though, it does seem the vast majority of those millions of users are very casual: https://x.com/LyraInTheFlesh/status/2003984636322427129

https://www.reddit.com/r/ChatGPTPro/comments/1ptitk0/chatgptpro_user_stats/
